Fully Automated Storage Tiering (FAST):

FAST allows administrators to define policies that automate the movement of LUNs between storage tiers based on priority.

Advantages of FAST:

- Heavily loaded LUNs can be placed on the high-performance EFD (Enterprise Flash Drive) tier.

- Lightly used data is moved to the lower-cost SATA tier.

Storage tier: a collection of storage of the same type.

Three kinds of storage tiers are available in VMAX:

- EFD

- FC

- SATA

VNX offers three types of storage tiers:

- EFD

- SAS

- NL-SAS

We won't cover FAST for thick devices, because provisioning thick devices is no longer recommended.

FAST VP:

FAST VP implements FAST for Virtual Provisioning (thin devices).

Let's see how FAST VP works:

- Thin devices are created and associated with a FAST policy.

- Based on the defined FAST policy, heavily utilized data is identified at the sub-LUN level.

- Heavily utilized sub-LUN extents are moved to the Flash (EFD) tier.

- Underutilized sub-LUN extents are moved to the SATA tier.

- FAST VP identifies heavily utilized sub-LUN extents using the Symmetrix microcode and the FAST controller.
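The hot/cold placement idea above can be illustrated with a toy model. This is a minimal sketch, not EMC's algorithm. It assumes each sub-LUN extent has a single hypothetical activity score (`io_rate`) and that each tier offers a fixed number of extent "slots". The hottest extents go to EFD, the coldest go to SATA, and the rest stay on FC.

```python
from dataclasses import dataclass


@dataclass
class Extent:
    """One sub-LUN extent of a thin device (hypothetical model)."""
    lun: str
    index: int
    io_rate: float  # hypothetical activity score, e.g. I/Os per second


def place_extents(extents, efd_slots, sata_slots):
    """Rank extents by activity and assign a tier to each one.

    efd_slots / sata_slots are hypothetical tier capacities, counted in extents.
    The hottest efd_slots extents go to EFD, the coldest sata_slots go to SATA,
    and everything in between stays on FC.
    """
    ranked = sorted(extents, key=lambda e: e.io_rate, reverse=True)
    placement = {}
    for pos, ext in enumerate(ranked):
        key = (ext.lun, ext.index)
        if pos < efd_slots:
            placement[key] = "EFD"
        elif pos >= len(ranked) - sata_slots:
            placement[key] = "SATA"
        else:
            placement[key] = "FC"
    return placement
```

For example, with one EFD slot and one SATA slot, only the single busiest extent is promoted and only the single idlest extent is demoted. In the real product the analysis runs continuously, and movement is constrained by the FAST policy and the control parameters described below.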

Components of FAST VP:

Components description:

FAST VP on EMC Symmetrix has two components: the microcode, which resides in the Symmetrix operating environment, and the FAST controller, which resides on the Service Processor.

- Performance Data Collection:

Performance and utilization statistics for thin LUNs are collected continuously at the sub-LUN level.

- Performance Data Analysis:

The collected performance data is analyzed by the FAST controller.

- Intelligent Tiering Algorithm:

The intelligent tiering algorithm uses the data collected by the microcode and the analysis produced by the FAST controller to issue sub-LUN data movement requests to the VLUN VP data movement engine.

- Allocation Compliance algorithm:

Enforces, for each storage group, the upper limit on how much of each storage tier can be used for sub-LUN data movement.

- VLUN VP Data Movement Engine:

Moves extents of data between tiers as directed by the intelligent tiering algorithm.

FAST VP has two modes of operation:

- Automatic: data analysis and data movement are performed continuously.

- Off: only performance statistics are collected; no data movement takes place.

Elements of FAST VP:

Storage Tier:

A collection of drives of the same technology, such as EFD, FC, or SATA.

Storage Group:

A collection of host-accessible devices.

FAST Policy:

Defines the maximum percentage of a storage group's capacity that can reside on each storage tier.
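A FAST policy ties these three elements together. The sketch below shows how the allocation compliance check could be modelled. It reuses the tier names and percentages from the policy example later in this article. The usage figures are hypothetical, and so is the rule that a tier missing from the policy allows 0%. This is a simplified illustration, not the array's implementation.

```python
def compliance_violations(policy, sg_usage_gb):
    """Return the tiers on which a storage group exceeds its FAST policy limit.

    policy      -- tier name -> maximum percent of the storage group allowed there
    sg_usage_gb -- tier name -> GB of the storage group currently on that tier
    Result      -- tier name -> actual percent, only for tiers over their limit
    """
    total = sum(sg_usage_gb.values())
    violations = {}
    for tier, used in sg_usage_gb.items():
        # Assumption: a tier that is not part of the policy allows 0%.
        limit_pct = policy.get(tier, 0)
        used_pct = 100.0 * used / total if total else 0.0
        if used_pct > limit_pct:
            violations[tier] = round(used_pct, 1)
    return violations


policy = {"EFD_VP_Tier": 100, "FC_VP_Tier": 20, "SATA_VP_Tier": 10}
```

Here a storage group with 50/30/20 GB on EFD/FC/SATA would break the FC (20%) and SATA (10%) limits. Data movement would then be needed to bring it back into compliance.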

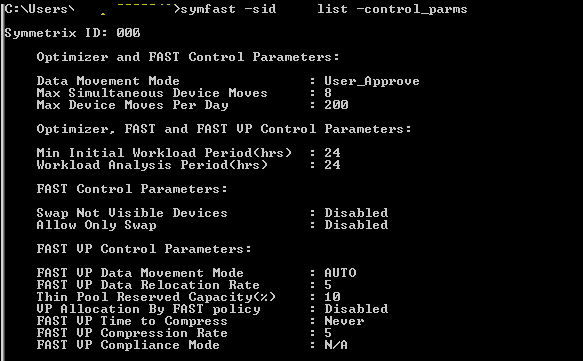

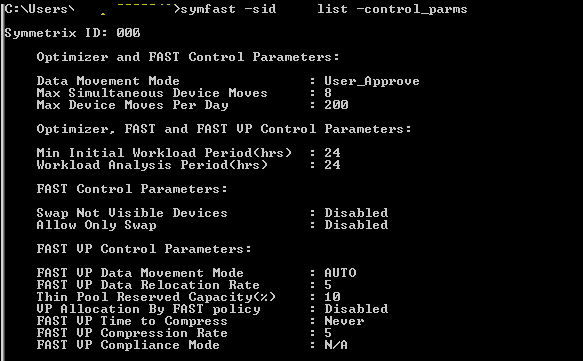

Control Parameters for FAST VP:

- Movement Mode: Automatic or Off

- Relocation rate: the amount of data that can be moved at a time, on a scale from 1 to 10 (default 5), where 1 is the highest rate and 10 the lowest.

- Reserved capacity limit: the percentage of each virtual pool reserved for non-FAST activity. Once a pool's free capacity falls to this level, FAST VP no longer moves data into it.

- Workload analysis period: the amount of workload samples collected for analysis.

- Initial analysis period: the minimum amount of workload data that must be collected before analysis begins.
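Two of these parameters are easy to misread, so here is a small sketch of how they behave. It assumes that the relocation rate simply has to fall within 1..10. It also assumes that the reserved capacity limit blocks movement into a pool once the pool's free percentage is at or below `pool_resv_cap`. Both function names are hypothetical and are not part of Solutions Enabler.

```python
def validate_reloc_rate(rate):
    """Relocation rate: 1 is the highest (most aggressive), 10 the lowest; default 5."""
    if not 1 <= rate <= 10:
        raise ValueError(f"relocation rate must be between 1 and 10, got {rate}")
    return rate


def fast_may_move_into_pool(pool_used_pct, pool_resv_cap):
    """Assumed behaviour of the reserved capacity limit: FAST VP may move data
    into a pool only while the pool's free space is above the reserved percentage."""
    free_pct = 100.0 - pool_used_pct
    return free_pct > pool_resv_cap
```

With `pool_resv_cap` set to 20 (as in the sample command later in this article), a pool that is 70% used still accepts FAST movement. A pool that is 85% used has only 15% free and does not.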

Sample FAST VP control Parameters:

Time Windows for FAST VP:

- Performance Time Window:

Performance data is collected 24×7. This window can be changed, but EMC does not recommend doing so.

- Move Time window:

The time window during which sub-LUN extents can be moved.
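A move time window is a set of days plus a start and end time. The sketch below checks whether a timestamp falls inside such a window. It mirrors the symtw example later in this article (every day, 18:00 to 24:00). Because `datetime.time` cannot express 24:00, an end time of `None` is used here to mean "until the end of the day". That convention is an assumption of this sketch.

```python
from datetime import datetime, time

ALL_DAYS = {0, 1, 2, 3, 4, 5, 6}   # datetime.weekday(): Monday=0 ... Sunday=6
WEEKDAYS = {0, 1, 2, 3, 4}


def in_window(ts: datetime, days, start: time, end=None) -> bool:
    """True if ts falls inside the window; end=None stands for 24:00."""
    if ts.weekday() not in days:
        return False
    t = ts.time()
    return t >= start and (end is None or t < end)
```

For example, `in_window(datetime(2024, 1, 1, 19, 30), ALL_DAYS, time(18, 0))` is true, because 1 January 2024 was a Monday and 19:30 is after 18:00. The same call at 09:00 is false.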

FAST implementation steps:

- Enable FAST VP.

- Set Control Parameters.

- Create Storage Tier.

- Create FAST Policy.

- Associate storage group to FAST Policy.

- Enable Time Windows setting.

Pre-checks:

Check for FAST VP licenses:

The license can be checked with the following command:

symlmf list -type emclm -sid XXX

Always consult EMC before implementing FAST VP, and get their recommendations for the control parameter and time window settings.

1. Enable FAST VP:

Command to enable FAST VP:

symfast -sid XX enable -vp

To list the FAST VP state:

symfast -sid XX list -state

2. Set Control Parameters:

To list control parameters:

symfast -sid xxx list -control_parms

To change the control parameter settings:

symfast -sid XXX set -control_parms -mode AUTO_APPROVE -max_simult_devs 8 -max_devs 240 -min_perf_period 2 -workload_period 24 -vp_data_move_mode AUTO -vp_reloc_rate 5 -pool_resv_cap 20 -vp_allocation_by_fp disable
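Long option strings like this one are easy to mistype. A common mistake is an en-dash (–) pasted in place of a hyphen, which the CLI will not accept as an option prefix. The sketch below builds the command from a dictionary of the same values so they can be reviewed or changed in one place. It only prints a string and does not run anything. `build_set_control_parms` and `CONTROL_PARMS` are hypothetical names, and every flag comes from the sample command above.

```python
import shlex

# Values copied from the sample command above; change them only on EMC's advice.
CONTROL_PARMS = {
    "mode": "AUTO_APPROVE",
    "max_simult_devs": 8,
    "max_devs": 240,
    "min_perf_period": 2,
    "workload_period": 24,
    "vp_data_move_mode": "AUTO",
    "vp_reloc_rate": 5,
    "pool_resv_cap": 20,
    "vp_allocation_by_fp": "disable",
}


def build_set_control_parms(sid, parms):
    """Return the 'symfast set -control_parms' command line as a string.

    Every option is emitted with an ASCII hyphen, and the relocation rate is
    range-checked (1 = highest, 10 = lowest) before the command is built.
    """
    if not 1 <= int(parms["vp_reloc_rate"]) <= 10:
        raise ValueError("vp_reloc_rate must be between 1 and 10")
    args = ["symfast", "-sid", str(sid), "set", "-control_parms"]
    for name, value in parms.items():
        args += [f"-{name}", str(value)]
    return shlex.join(args)


print(build_set_control_parms("XXX", CONTROL_PARMS))
```

The printed line matches the sample command above, character for character.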

3. Create Storage Tier:

symtier is the command used to create storage tiers.

RAID protection options for tier creation:

RAID 0 = -tgt_unprotected

RAID 1 = -tgt_raid1

RAID 5 = -tgt_raid5 -tgt_prot 3+1 or -tgt_raid5 -tgt_prot 7+1

RAID 6 = -tgt_raid6 -tgt_prot 6+2 or -tgt_raid6 -tgt_prot 14+2
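The protection type chosen for a tier determines how much of its raw capacity is usable. The following sketch computes the usable fraction for each option listed above from its data/protection layout. RAID 1 is modelled as one data copy plus one mirror, and unprotected RAID 0 as having no protection overhead. The dictionary and function names are illustrative only.

```python
# (data members, protection members) for each option listed above.
RAID_LAYOUTS = {
    "-tgt_unprotected": (1, 0),
    "-tgt_raid1": (1, 1),
    "-tgt_raid5 -tgt_prot 3+1": (3, 1),
    "-tgt_raid5 -tgt_prot 7+1": (7, 1),
    "-tgt_raid6 -tgt_prot 6+2": (6, 2),
    "-tgt_raid6 -tgt_prot 14+2": (14, 2),
}


def usable_fraction(option):
    """Fraction of raw capacity left for data after RAID protection."""
    data, protection = RAID_LAYOUTS[option]
    return data / (data + protection)


def usable_capacity(raw_tb, option):
    """Usable capacity (same unit as raw_tb) for a given protection option."""
    return raw_tb * usable_fraction(option)
```

For instance, 8 TB of raw EFD capacity protected as RAID 5 7+1 yields 7 TB usable. The same drives in RAID 6 6+2 would yield 6 TB.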

We have already created three pools named EFD, FC, and SATA.

Creating EFD Tier:

symtier -sid xxx create -name EFD_VP_Tier -tgt_raid5 -tgt_prot 7+1 -technology EFD -vp -pool EFD

Creating FC Tier:

symtier -sid xxx create -name FC_VP_Tier -tgt_raid5 -tgt_prot 3+1 -technology FC -vp -pool FC

Creating SATA Tier:

symtier -sid xxx create -name SATA_VP_Tier -tgt_raid6 -tgt_prot 6+2 -technology SATA -vp -pool SATA

To list tiers:

symtier -sid XXX list

4. Create FAST Policy:

Create the FAST VP policy:

symfast -sid xxx -fp create -name EFD_VP

Add the storage tiers to the FAST policy:

symfast -sid xxx -fp add -tier_name EFD_VP_Tier -max_sg_percent 100 -fp_name EFD_VP

symfast -sid xxx -fp add -tier_name FC_VP_Tier -max_sg_percent 20 -fp_name EFD_VP

symfast -sid xxx -fp add -tier_name SATA_VP_Tier -max_sg_percent 10 -fp_name EFD_VP

The tier percentages in a FAST policy can total at most 300% (up to 100% for each of the three tiers). The example above totals 130%.
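Before running the `symfast -fp add` commands, it can help to sanity-check the planned percentages. This sketch applies the 300% maximum stated above and caps each tier at 100%. It also assumes that the total must be at least 100%, so that the whole storage group can always be placed, and that a policy holds one to three tiers. Verify both assumptions against EMC's documentation for your release. `validate_fast_policy` is a hypothetical helper.

```python
def validate_fast_policy(tiers):
    """tiers: tier name -> max_sg_percent, as passed to 'symfast -fp add'.

    Returns the total percentage if the policy looks valid, else raises ValueError.
    """
    if not 1 <= len(tiers) <= 3:
        raise ValueError("a FAST VP policy holds one to three tiers (assumed)")
    for name, pct in tiers.items():
        if not 0 < pct <= 100:
            raise ValueError(f"{name}: max_sg_percent must be in 1..100")
    total = sum(tiers.values())
    # Upper bound from the text; lower bound is an assumption (see lead-in).
    if not 100 <= total <= 300:
        raise ValueError(f"tier percentages total {total}%, expected 100..300%")
    return total
```

The EFD_VP policy above (100 + 20 + 10) passes with a total of 130%. A policy of 50% EFD plus 30% FC would be rejected, because 80% cannot hold the entire storage group.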

To list FAST VP policies:

symfast list -sid 606 -fp -vp

5. Associate storage group to FAST Policy:

Storage groups are created during Auto-provisioning for a host.

Associate a storage group with a FAST policy:

symfast -sid xxx associate -sg Storage_group -fp_name EFD_VP -priority 2

A storage group associated with a FAST policy is assigned a priority from 1 to 3, where 1 is the highest, 3 is the lowest, and 2 is the default.
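Priority matters when several storage groups compete for the same tier capacity. The sketch below assumes that groups with a higher priority (lower number) are favoured, and breaks ties alphabetically. It is a simplified illustration, not how the FAST controller actually schedules work. The group names other than `Storage_group` are hypothetical.

```python
def order_by_priority(associations):
    """associations: storage group -> priority (1 highest, 3 lowest, 2 default).

    Returns the storage groups in the order they would be favoured, assuming a
    lower number always wins and ties are broken by name.
    """
    for sg, prio in associations.items():
        if prio not in (1, 2, 3):
            raise ValueError(f"{sg}: priority must be 1, 2 or 3")
    return sorted(associations, key=lambda sg: (associations[sg], sg))
```

For example, `{"Oracle_SG": 1, "File_SG": 3, "Storage_group": 2}` is ordered as `Oracle_SG`, `Storage_group`, `File_SG`.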

To list associations:

symfast -sid xxx list -association

6. Enable Time Windows setting:

To list time windows:

symtw list -sid XXX

To change the move time window:

symtw -sid XXX add -type MOVE_VP -days mon,tue,wed,thu,fri,sat,sun -start_time 18:00 -end_time 24:00 -inclusive

To change the performance time window:

symtw -sid xxx -inclusive -type perf add -days Mon,Tue,Wed,Thu,Fri,Sat,Sun -start_time 00:00 -end_time 24:00

The performance time window is usually left unchanged; the default setting is preferred.